Another Expert Just Damaged His Reputation By Relying On AI

It's one shortcut that can cause some big headaches in the long run. (Image: Giphy)

Although the potential benefits of rapidly advancing artificial intelligence are certainly worth consideration, concerns about the use of such tools, particularly in high-stakes professions, don’t seem to be subsiding.

That brings us to the recent case of Jeff Hancock, an expert in misinformation who founded the Stanford Social Media Lab.

ChatGPT hallucinates again

AI chatbots can access an unimaginable amount of data in fractions of a second and produce generally precise and coherent responses to just about any query. But once in a while, a few wires get crossed and the algorithm produces something patently false, if not offensive or dangerous.

And when authoritative figures use AI to speed up or automate parts of their research, those so-called “hallucinations” can cause big problems.

Hancock found that out when a review of a recent legal document he submitted in Minnesota determined that some of the citations therein were entirely fictional.

Attorneys on the other side of the case brought up the fabricated citations and insisted that the “unreliable” affidavit be stricken from the record.

The devil is in the details

Given Hancock’s field of expertise, the presence of AI-generated misinformation in his own affidavit drew particularly swift backlash.

Amid mounting pressure, he ultimately admitted the declaration he submitted as his own had been partially drafted by ChatGPT … but he went on to offer some context in hopes of salvaging his credibility.

“I wrote and reviewed the substance of the declaration, and I stand firmly behind each of the claims made in it, all of which are supported by the most recent scholarly research in the field and reflect my opinion as an expert regarding the impact of AI technology on misinformation and its societal effects.”

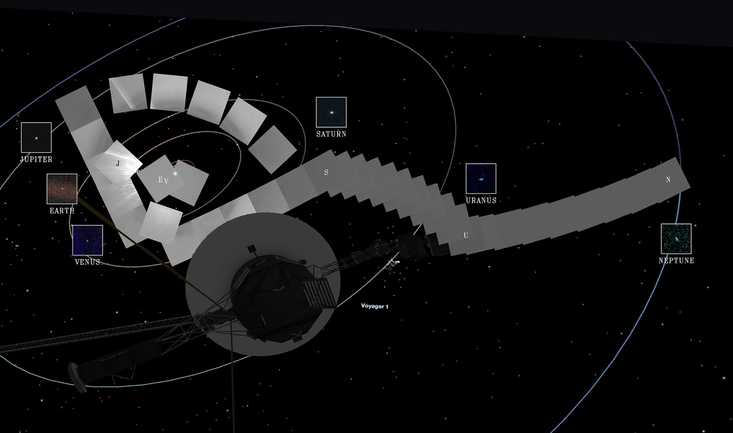
